Authors: François-Marie Le Régent

Welcome back, quantum enthusiasts!

In our first exploration, “Understanding FTQC Part I”, we journeyed through the delicate world of quantum bits, or qubits, uncovering why errors are an inherent challenge in quantum computing. Let’s bring that closer to reality. Imagine a quantum algorithm for modelling molecular interactions. If we are not careful about how we design the quantum circuit, a single error occurring anywhere in the computation could propagate through the entangled quantum system and corrupt the entire simulation. Unlike classical computers, where errors typically remain localized, quantum errors can spread rapidly through quantum gates, transforming what should be a precise molecular model into meaningless noise. This isn’t simply a matter of running any quantum circuit and hoping for the best: designing fault-tolerant circuits requires deliberate engineering to prevent error propagation.

The challenge is particularly subtle. We need to detect and handle errors without destroying the delicate quantum information we are trying to protect, all while ensuring that our error-handling mechanisms don’t themselves become sources of additional errors. When such an algorithm is used in drug discovery, for example to predict solubility or toxicity, an undetected error could lead to costly synthesis and testing of ineffective drug candidates. This is why we ask the fundamental question: how do we verify that our quantum circuit designs can actually withstand the inevitable errors? Fault tolerance transforms quantum computing from an interesting but unreliable research tool into a dependable platform for solving real-world problems where accuracy isn’t just preferred but essential.

We saw how Quantum Error Correction (QEC) acts like an orchestra conductor, distributing information across many qubits to protect the overall quantum “melody” even if a few “instruments” go slightly out of tune. We learned that the goal isn’t perfect hardware, but instead clever design that allows computation to proceed correctly even when individual components inevitably fail. In the “digital” setting, as opposed to the analog one, the incredible computational potential of quantum systems remains theoretical rather than practical without fault tolerance.

This brings us to the next big question: How do engineers make sure their designs achieve this robustness? How do we test and verify that a quantum circuit truly is fault-tolerant? That’s precisely what we’ll delve into in this post.

I. Verifying Fault-Tolerant Designs: Building on Part I

As explained in Part I, Quantum Error Correction (QEC) is the crucial technique to engineer this kind of robustness: cleverly encoding information across multiple physical qubits (like spreading a melody across an orchestra) so that errors can be detected and hopefully corrected without destroying the fragile quantum information.

In this post we shift focus from the why to the how, exploring the practical methods engineers use to rigorously test their quantum circuit designs. Our goal is thus to verify whether these designs truly live up to the fault-tolerant ideal: can they overcome potential component failures and still produce a trustworthy result? This involves simulating how circuits behave not just under perfect conditions, but also when specific errors are intentionally introduced, mimicking the challenges faced by real-world quantum hardware.

II. What Makes an Outcome Acceptable?

So, how do engineers check if a circuit design is fault-tolerant? We can think of it like stress-testing a new car design before it goes into production. Just as automotive engineers subject a car to specific, controlled challenges to see how it responds to individual faults, quantum engineers probe how a circuit handles errors one at a time. They start by examining what happens when a single error occurs at a specific location. This “local” view allows them to trace exactly how one fault propagates through the circuit design. For each individual error scenario, there are three acceptable outcomes:

- The Correct Result: The circuit still produces the intended target quantum state. This means the injected error either had no actual impact on the final outcome or the circuit’s structure inherently corrected or compensated for it.

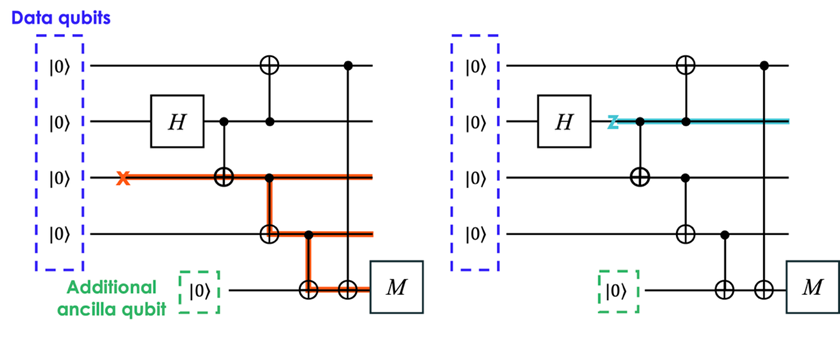

- A Flagged Error: The final state is incorrect and the error may have spread, but an error flag (like the ancilla qubit in our Part I example) is successfully activated, as shown in the left part of Fig 1. This tells the control system, “Something went wrong here; discard this result and try again.” It prevents a corrupted state from being used later.

- A Localized Error: An error did occur and wasn’t flagged, but its effect is limited and has not spread (see the right part of Fig 1). Specifically, the final error affects at most one physical qubit within any given group that’s working together to protect a single piece of logical information (an encoded block). This is crucial because, as hinted in Part I, the broader QEC framework is designed to handle such localized errors. Because the error hasn’t spread uncontrollably, the result is still considered valid.
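The three acceptable outcomes amount to a small decision rule. Here is a minimal Python sketch of that rule. The names `Outcome`, `classify_outcome`, and the block labels are hypothetical, and the sketch assumes that something upstream (a simulator, for instance) has already counted how many physical qubits in each encoded block carry an error in the final state. It also assumes a raised flag always means "discard", even if the state happens to be fine.

```python
from enum import Enum


class Outcome(Enum):
    CORRECT = "correct result"
    FLAGGED = "flagged error"
    LOCALIZED = "localized error"
    NOT_FAULT_TOLERANT = "unflagged error spread within a block"


def classify_outcome(flag_raised: bool, corrupted_per_block: dict[str, int]) -> Outcome:
    """Apply the acceptance criteria to one single-error scenario.

    corrupted_per_block maps each encoded block (hypothetical labels) to the
    number of its physical qubits that carry an error in the final state.
    """
    if flag_raised:
        # Assumption: a raised flag means the run is discarded and retried.
        return Outcome.FLAGGED
    worst = max(corrupted_per_block.values(), default=0)
    if worst == 0:
        return Outcome.CORRECT
    if worst == 1:
        # At most one corrupted qubit in any block: QEC can still handle it.
        return Outcome.LOCALIZED
    return Outcome.NOT_FAULT_TOLERANT


# One error in each of two different blocks is still acceptable...
print(classify_outcome(False, {"block_A": 1, "block_B": 1}))
# ...but two errors inside the same block, with no flag, is not.
print(classify_outcome(False, {"block_A": 2, "block_B": 0}))
```

Note that the rule looks at the worst block, not the total number of errors: two errors in two different blocks pass, two errors in one block do not.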

Understanding what counts as an acceptable outcome is just the first step. The real challenge lies in methodically checking every possible error scenario in practice. But how exactly do engineers systematically evaluate each scenario and apply these criteria? This brings us to the practical toolkit that makes fault tolerance verification possible.

III. The Testing Process: From Simulation to Verification

Now that we understand what constitutes an acceptable outcome for any single error scenario, let’s explore the systematic process engineers use to evaluate circuit designs. It involves simulating how the circuit performs under various conditions:

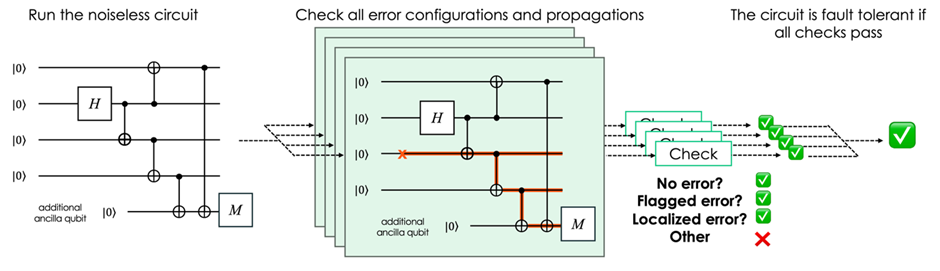

- Run Baseline Check (Ideal Simulation): First, engineers use numerical simulators on classical computers to confirm the circuit works perfectly as intended in an error-free, ideal world with perfect qubits. This verifies the fundamental logic of the design.

- Perform Stress Injection (Simulating Failures): Next, they identify all the possible locations of errors and deliberately introduce simplified errors into the simulation, one at a time. These represent common physical issues, like a qubit accidentally flipping its value (a bit-flip) or its phase (a phase-flip), or errors occurring during interactions between qubits (the entangling gates). By introducing these intentional faults, engineers can observe how the circuit responds to individual component failures.

- Analyze the Results: Engineers examine the outcome, that is, the final output quantum state, for every error configuration, applying the criteria from Section II. Even in the presence of errors, did every run still produce the correct result, correctly raise a flag indicating an error occurred (like our ancilla qubit example with the GHZ state we explored in Part I), or leave only a localized error? Answering these questions helps pinpoint vulnerabilities in the design.

If a single simulated component failure leads to an unflagged error that corrupts multiple qubits within the same encoded block, the circuit design fails the fault-tolerance test. Once spread, such undetected errors become significantly harder, or even impossible, for the QEC system to fix. This criterion directly addresses the core goal of fault tolerance as we discussed in Part I: preventing minor, local faults from escalating into catastrophic failures. In short, a circuit design is fault-tolerant only if no single component failure causes an unflagged error that corrupts multiple qubits within the same encoded block (see Fig 2).

- Handle Complexity (Beyond Full Simulation): Simulating every possible error in huge circuits can become computationally infeasible. Because of this limitation, engineers rely on alternative methods for complex designs. These include mathematical proofs based on the error correction codes used, and ensuring circuit designs follow established fault-tolerant patterns. This approach helps confirm robustness without the need to exhaustively simulate every potential failure.

Through this multi-faceted testing process, engineers gain confidence that a circuit design can effectively manage errors, either by preventing them from spreading or by flagging them.
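To make the first three steps concrete, here is a small NumPy sketch that runs them on a toy example. Everything specific in it is an assumption made for illustration: the circuit (a 4-qubit GHZ preparation followed by a parity check of qubits 0 and 3 on a flag ancilla) is not necessarily the one from Part I, the fault model only inserts Pauli errors right after each gate on the qubits that gate touches, and the helper names (`embed`, `cnot`, `run`, `single_faults`, `classify`) are made up for this example.

```python
import functools
import itertools
import numpy as np

N_DATA, FLAG = 4, 4          # data qubits 0..3, flag ancilla is qubit 4
N = N_DATA + 1

I2 = np.eye(2, dtype=complex)
X = np.array([[0, 1], [1, 0]], dtype=complex)
Y = np.array([[0, -1j], [1j, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)
H = (X + Z) / np.sqrt(2)
PAULIS = {"I": I2, "X": X, "Y": Y, "Z": Z}


def embed(ops):
    """Full 2^N x 2^N operator with ops[q] on qubit q and identity elsewhere."""
    return functools.reduce(np.kron, [ops.get(q, I2) for q in range(N)])


def cnot(control, target):
    p0, p1 = np.diag([1, 0]).astype(complex), np.diag([0, 1]).astype(complex)
    return embed({control: p0}) + embed({control: p1, target: X})


# Hypothetical circuit: prepare a 4-qubit GHZ state, then check the parity of
# qubits 0 and 3 on the flag ancilla. Each entry is (unitary, qubits touched).
CIRCUIT = [
    (embed({0: H}), (0,)),
    (cnot(0, 1), (0, 1)),
    (cnot(1, 2), (1, 2)),
    (cnot(2, 3), (2, 3)),
    (cnot(0, FLAG), (0, FLAG)),
    (cnot(3, FLAG), (3, FLAG)),
]


def run(fault=None):
    """Simulate the circuit; fault = (gate index, {qubit: Pauli label}) or None."""
    state = np.zeros(2**N, dtype=complex)
    state[0] = 1.0
    for i, (unitary, _) in enumerate(CIRCUIT):
        state = unitary @ state
        if fault is not None and fault[0] == i:
            state = embed({q: PAULIS[p] for q, p in fault[1].items()}) @ state
    return state


def single_faults():
    """Every non-identity Pauli on the qubits a gate touches, right after that gate."""
    for i, (_, qubits) in enumerate(CIRCUIT):
        for labels in itertools.product("IXYZ", repeat=len(qubits)):
            if set(labels) != {"I"}:
                yield i, dict(zip(qubits, labels))


def classify(state, ideal):
    # The flag is the last qubit, so odd basis indices have flag = 1.
    if np.sum(np.abs(state[1::2]) ** 2) > 0.5:
        return "flagged"
    if np.isclose(abs(np.vdot(ideal, state)), 1.0):
        return "correct"
    for q, p in itertools.product(range(N_DATA), "XYZ"):
        if np.isclose(abs(np.vdot(ideal, embed({q: PAULIS[p]}) @ state)), 1.0):
            return "localized"   # undone by one single-qubit Pauli on the data
    return "not fault-tolerant"


# Step 1: baseline check. Ideal output is (|0000> + |1111>)/sqrt(2) with flag 0.
ideal = run()
assert np.isclose(abs(ideal[0b00000]) ** 2, 0.5) and np.isclose(abs(ideal[0b11110]) ** 2, 0.5)

# Steps 2 and 3: inject each fault alone and classify the result.
results = {(i, tuple(f.items())): classify(run((i, f)), ideal) for i, f in single_faults()}
failures = [k for k, v in results.items() if v == "not fault-tolerant"]
print(len(results), "faults tested,", len(failures), "unacceptable")
```

Under these assumptions the script tests 78 single faults and finds none that is unacceptable. The flag is doing real work here: a bit-flip on qubit 2 right after the second CNOT spreads to qubits 2 and 3, and it is only acceptable because the parity check on the ancilla catches it. Building 32 x 32 matrices with `np.kron` is fine for five qubits, but it is exactly the kind of brute-force simulation that stops scaling for larger circuits.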

IV. Conclusion: Engineering Reliability

Verifying fault tolerance isn’t magic; it’s a careful engineering process. It involves establishing that a circuit design first works correctly under ideal conditions, and then systematically stress-testing it by simulating how it would respond to individual component failures. By analysing whether the outcomes lead to the correct result, a flagged warning, or only a localized error, engineers can determine if the design meets the specific requirements of fault tolerance.

This fault-tolerance verification process often starts with fundamental building blocks, like state preparation circuits, and gradually scales up to more complex operations. Ultimately, this is how we build confidence that our quantum algorithms will produce trustworthy results, even when running on inherently imperfect hardware.

We now clearly understand that errors are inevitable, and how to design and test the robustness of our computations against them. A cornerstone of this construction is Quantum Error Correction. While we have hinted several times at how it works, we will expose the inner workings of QEC in greater detail in the next post.

V. Pasqal Spotlight

“Developing the ability to verify fault tolerance of circuits is a significant milestone on the path to building a full QEC stack, which is essential for reliable quantum computation. At Pasqal we are deeply engaged in this challenge as our hardware continues to mature.”

Adrien Signoles, Chief Hardware Officer – Pasqal

Want to hear how Pasqal is advancing toward fault-tolerant quantum computing and what’s next on our hardware and software horizon? Join our Quantum Roadmap Webinar on June 17 to get a front-row look at our strategic direction, the milestones we’re setting, and the technologies we’re building to reach them. Register here